Understanding AI

Artificial intelligence, or AI, refers to the simulation of human intelligence by software-coded heuristics. It is a wide-ranging branch of computer science concerned with building smart machines capable of performing tasks that typically require human intelligence. Artificial intelligence allows machines to model, or even improve upon, the capabilities of the human mind.

Machine learning (ML) is a type of AI that allows software applications to become more accurate at predicting outcomes without being explicitly programmed to do so. Machine learning algorithms use historical data as input to predict new output values.

Deep learning is a subset of machine learning built on neural networks with three or more layers. These neural networks attempt to simulate the behavior of the human brain, albeit far from matching its ability, allowing them to “learn” from large amounts of data.

Artificial intelligence is discussed everywhere. It has been portrayed in popular media such as films and television, and in lengthy, sometimes fear-inducing talks claiming that its existence could spell the end of our times. What is certain is that AI is very real and a genuinely helpful tool for us humans.

Many of us already know how artificial intelligence (AI) is built into our daily lives. Below is a collection of examples we compiled. It is not exhaustive, and other noteworthy cases are left out of this article:

Drift uses chatbots, machine learning and natural language processing to help businesses book more meetings, assist customers with product questions and make the sales cycle more efficient.

X, formerly known as Twitter, has algorithms that direct users to people to follow, tweets and news based on a user’s individual preferences. Additionally, X uses AI to monitor and categorize video feeds based on subject matter.

AMP designs, engineers and manufactures robotic systems for recycling sites. Because the robots, which are powered by AI, can quickly discern variations in materials — like material type, shape, texture, color and logos — they can digitize and efficiently process every object that comes through a recycling facility, targeting specific items on conveyor belts as needed.

iRobot is probably best known for developing Roomba, the smart vacuum that uses AI to scan room size, identify obstacles and remember the most efficient routes for cleaning. The self-deploying Roomba can also determine how much vacuuming there is to do based on a room’s size, and it needs no human assistance to clean floors.

SoftBank Robotics developed a humanoid robot known as Pepper, which is equipped with an “emotion engine” that makes it “capable of recognizing faces and basic human emotions.” Standing 4 feet tall, Pepper can operate in more than a dozen languages and has a touch screen attached to support communication.

PathAI creates AI-powered technology for pathologists. The company’s machine learning algorithms help pathologists analyze tissue samples and make more accurate diagnoses. The aim is to improve not only diagnostic accuracy but also treatment. PathAI’s technology can also identify optimal clinical trial participants.

Atomwise is using AI and deep learning to facilitate drug discovery. Atomwise’s algorithms have helped tackle some of the most pressing medical issues, including Ebola and multiple sclerosis.

Tesla has four electric vehicle models on the road with autonomous driving capabilities. The company uses artificial intelligence to develop and enhance the technology and software that enable its vehicles to automatically brake, change lanes and park.

Why Is Artificial Intelligence Important?

Artificial intelligence (AI) seeks to give computers human-like processing and analytical skills so that they can serve as helpful tools alongside people in daily life. AI can comprehend and sort data at scale, solve hard problems and automate numerous processes simultaneously, which saves time and effort and fills operational gaps that humans overlook. AI is the foundation of computer learning and is used in nearly every sector of the economy, including manufacturing, healthcare and education, to support data-driven decision-making and to complete labor-intensive or repetitive jobs.

Artificial intelligence is also commonly used to improve user experiences. Examples include online platforms with recommendation engines, automobiles with autonomous driving capabilities and cell phones with AI assistants. AI also helps protect people by powering fraud detection systems and robots for hazardous tasks, and it supports research in healthcare and climate initiatives.

Strong AI vs. Weak AI

It’s common to distinguish between weak and strong artificial intelligence. Weak AI, also known as narrow AI, automates specific tasks and often outperforms humans at them, but it cannot work outside those limits. Strong AI, also known as artificial general intelligence, refers to AI that can mimic human learning and thought processes, although it remains hypothetical.

Weak AI

Also called narrow AI, weak AI operates within a limited context and is applied to a narrowly defined problem. It often performs just a single task extremely well. Common weak AI examples include email inbox spam filters, language translators, website recommendation engines and conversational chatbots.
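To make the spam filter example concrete, here is a minimal sketch of a narrow, single-task classifier. It uses a naive Bayes word-count model, which is one classic approach and not necessarily what any real email provider runs. The training messages and the function names `train` and `classify` are invented for illustration.

```python
import math
from collections import Counter


def train(messages):
    """Count word frequencies per label from (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def classify(text, word_counts, label_counts):
    """Return the label with the higher naive Bayes log-probability."""
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    total_msgs = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        # Log prior: how common this label is in the training data.
        score = math.log(label_counts[label] / total_msgs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Laplace (add-one) smoothing avoids log(0) for unseen words.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical training data, invented for this sketch.
training_messages = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting at noon tomorrow", "ham"),
    ("lunch with the team", "ham"),
    ("project report due tomorrow", "ham"),
]

word_counts, label_counts = train(training_messages)
print(classify("free prize inside", word_counts, label_counts))
print(classify("team meeting tomorrow", word_counts, label_counts))
```

The model does one thing only: it sorts messages into two labels based on the words it has seen. Ask it to translate a sentence or recommend a product and it has nothing to offer, which is exactly what makes it weak AI.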

Strong AI

Often referred to as Artificial General Intelligence (AGI) or simply General AI, strong AI describes a system that can solve problems it’s never been trained to work on, much like a human can. AGI does not actually exist yet. For now, it remains the kind of AI we see depicted in popular culture and science fiction.

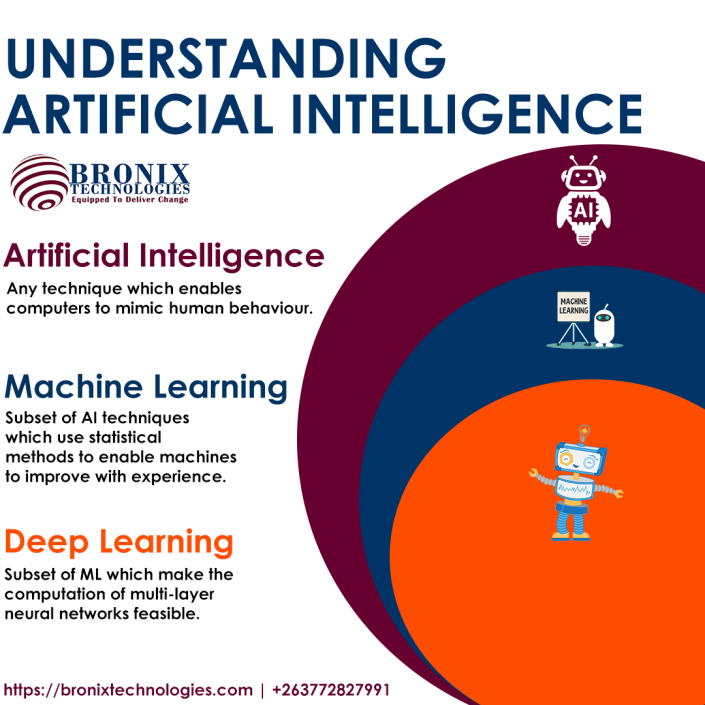

Differences between AI, machine learning and deep learning

In enterprise IT, the terms artificial intelligence (AI), machine learning (ML) and deep learning are often used interchangeably, particularly by businesses in their marketing collateral. However, there are differences. The term AI, coined in the 1950s, refers to the simulation of human intelligence by machines. It covers a constantly evolving set of capabilities as new technologies are created, and the technologies under the AI umbrella include machine learning and deep learning.

Machine learning enables software applications to become more accurate at predicting outcomes without being explicitly programmed to do so. Machine learning algorithms use historical data as input to predict new output values, an approach that became far more effective as larger training data sets became available. Deep learning, a subset of machine learning, is based on our understanding of how the brain is structured. Its use of artificial neural networks underpins recent advances in AI, including self-driving cars and ChatGPT.
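The idea of using historical data to predict new output values can be sketched with one of the simplest learning methods, a least-squares line fit. This is only an illustration under stated assumptions: the ad-spend figures are made up, and the helper names `fit_line` and `predict` are hypothetical.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the slope.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to an input the model has not seen."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical historical data: monthly ad spend (in $1000s) and units sold.
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units_sold = [12.0, 19.0, 31.0, 42.0, 48.0]

model = fit_line(ad_spend, units_sold)
forecast = predict(model, 6.0)
print(f"slope={model[0]:.2f}, intercept={model[1]:.2f}, forecast={forecast:.1f}")
```

Nobody wrote a rule saying how many units a given spend produces. The relationship is estimated from past examples, and more examples generally give a better estimate.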

What is the history of AI?

The concept of “a machine that thinks” dates back to ancient Greece. Since the advent of electronic computing, the significant events and turning points in the development of artificial intelligence (and those related to some of the subjects covered in this article) include the following:

1940s

Princeton mathematician John von Neumann conceives the architecture for the stored-program computer, the idea that a computer’s program and the data it processes can both be kept in the computer’s memory. Warren McCulloch and Walter Pitts lay the foundation for neural networks.

1950

Alan Turing publishes Computing Machinery and Intelligence. In this paper, Turing, who is famous for breaking the German ENIGMA code during World War II and is often referred to as the “father of computer science,” asks the question “Can machines think?” He then introduces what is now known as the “Turing Test,” in which a human interrogator tries to distinguish between a computer-generated and a human-written text response. Although the test has drawn much criticism since its publication, it remains an important part of the history of AI and an ongoing concept within philosophy, as it draws on ideas from linguistics.

1956

John McCarthy, who would later invent the Lisp language, coins the term “artificial intelligence” at the first AI conference, held at Dartmouth College. Later that year, Allen Newell, J.C. Shaw and Herbert Simon create the Logic Theorist, the first running AI software program.

1958

Frank Rosenblatt builds the Mark 1 Perceptron, the first computer based on a neural network that “learned” through trial and error. In 1969, Marvin Minsky and Seymour Papert publish Perceptrons, which becomes both a landmark work on neural networks and, at least for a while, an argument against future neural network research.

1980s

Neural networks that are trained with the backpropagation algorithm become widely used in AI applications.
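Backpropagation is easier to follow on a very small network. The sketch below is an illustration only: it assumes one sigmoid hidden unit feeding one linear output unit and a squared-error loss, and the toy data, starting weights and function names are invented for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """One hidden sigmoid unit feeding one linear output unit."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)  # hidden activation
    y = w2 * h + b2           # network output
    return h, y


def loss(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backward(params, x, target):
    """Backpropagation: apply the chain rule from the output back to the input."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    d_y = y - target           # dLoss/dy
    d_w2 = d_y * h             # gradient for the output weight
    d_b2 = d_y                 # gradient for the output bias
    d_h = d_y * w2             # error passed back to the hidden unit
    d_z = d_h * h * (1.0 - h)  # through the sigmoid, whose slope is h * (1 - h)
    d_w1 = d_z * x             # gradient for the hidden weight
    d_b1 = d_z                 # gradient for the hidden bias
    return [d_w1, d_b1, d_w2, d_b2]


def train(params, data, learning_rate=0.1, epochs=2000):
    """Full-batch gradient descent using the averaged backprop gradients."""
    params = list(params)
    for _ in range(epochs):
        grads = [0.0] * 4
        for x, target in data:
            for i, g in enumerate(backward(params, x, target)):
                grads[i] += g / len(data)
        params = [p - learning_rate * g for p, g in zip(params, grads)]
    return params


def mean_loss(params, data):
    return sum(loss(params, x, t) for x, t in data) / len(data)


# Hypothetical toy data and starting weights, chosen only for illustration.
data = [(0.0, 0.2), (1.0, 0.6), (2.0, 0.8)]
initial = [0.5, 0.0, 0.5, 0.0]
trained = train(initial, data)
print(f"loss before: {mean_loss(initial, data):.4f}, after: {mean_loss(trained, data):.4f}")
```

The error at the output is passed backward one layer at a time, and each weight is nudged in the direction that reduces it. Networks with many layers repeat the same step for every layer.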

1995

Stuart Russell and Peter Norvig publish Artificial Intelligence: A Modern Approach, which quickly becomes one of the leading textbooks in the study of AI. In it, they explore four potential goals or definitions of AI, which differentiate computer systems on the basis of rationality and of thinking versus acting.

1997

IBM’s Deep Blue defeats then world chess champion Garry Kasparov in a rematch, after losing their first match in 1996.

2004

In a paper titled “What Is Artificial Intelligence?”, John McCarthy proposes an often-cited definition of AI.

2011

IBM Watson beats champions Ken Jennings and Brad Rutter at Jeopardy!

2015

Baidu’s Minwa supercomputer uses a special kind of deep neural network, called a convolutional neural network, to identify and categorize images more accurately than the average person.

2016

DeepMind’s AlphaGo program, powered by a deep neural network, beats world Go champion Lee Sedol in a five-game match. The victory is significant given the huge number of possible moves as the game progresses (more than 14.5 trillion after just four moves). Google had acquired DeepMind in 2014 for a reported $400 million USD.

2023

The rise of large language models, or LLMs, such as ChatGPT brings a major change in the performance of AI and its potential to deliver enterprise value. With these new generative AI techniques, deep-learning models can be pre-trained on vast amounts of raw, unlabeled data.

What are the applications of AI?

Artificial intelligence has made its way into a wide variety of markets. Here are some examples.

In healthcare, the biggest bets are on improving patient outcomes and reducing costs. Companies are applying machine learning to make faster and better diagnoses than humans can. One of the best-known healthcare technologies is IBM Watson. It understands natural language and can respond to questions asked of it. The system mines patient data and other available data sources to form a hypothesis, which it then presents with a confidence scoring schema. Other AI applications include online virtual health assistants and chatbots that help patients and healthcare customers find medical information, schedule appointments, understand the billing process and complete other administrative tasks. An array of AI technologies is also being used to predict, fight and understand pandemics such as COVID-19.

In education, AI can automate grading, giving teachers more time for other tasks. It can assess students and adapt to their needs, helping them work at their own pace. AI tutors can provide additional support to keep students on track. The technology could also change where and how students learn, perhaps even replacing some teachers. As demonstrated by ChatGPT, Google Bard and other large language models, generative AI can help educators craft coursework and other teaching materials and engage students in new ways. The arrival of these tools also forces educators to rethink homework, testing and plagiarism policies.

In business, machine learning algorithms are being integrated into analytics and customer relationship management (CRM) platforms to uncover insights on how to serve customers better. Chatbots have been built into websites to give customers immediate assistance. The rapid advancement of generative AI technology such as ChatGPT is expected to have far-reaching consequences, including the elimination of jobs, a revolution in product design and the disruption of business models.

In finance, applications of artificial intelligence (AI) include data analytics, performance measurement, predictions and forecasting, real-time calculations, customer servicing, intelligent data retrieval and more. This set of technologies helps financial services firms better understand and learn from markets and customers, and engage with them in a way that mimics human intelligence and interaction at scale.

The entertainment industry uses artificial intelligence (AI) for a variety of tasks, including distribution, fraud detection, scriptwriting and movie production. In media, automated journalism helps newsrooms streamline workflows and reduce time, costs and complexity. Newsrooms use AI to automate routine tasks such as data entry and proofreading, to research topics and to help write headlines.

In law, the discovery process of sifting through documents is often overwhelming for humans. AI is being used to help automate the legal sector’s labor-intensive processes, which saves time and improves client service. Law firms use machine learning to describe data and predict outcomes, computer vision to classify and extract information from documents, and natural language processing (NLP) to interpret requests for information.

In security, artificial intelligence and machine learning top the list of buzzwords vendors use to market their products, so buyers should proceed with caution. Still, AI techniques are being applied successfully to several areas of cybersecurity, including anomaly detection, behavioral threat analytics and reducing false positives. Organizations use machine learning in security information and event management (SIEM) software and related areas to detect anomalies and identify suspicious activity that points to threats. By analyzing data and using logic to find similarities to known malicious code, AI can alert users to new and emerging attacks much sooner than human employees and earlier generations of technology could.
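Anomaly detection, mentioned above, can be sketched with a simple statistical rule: flag any value that sits far from the average. This is a deliberately basic stand-in, not how any particular SIEM product works. The failed-login counts are invented, and `find_anomalies` with its threshold of 3 standard deviations is an assumption for illustration.

```python
import statistics


def find_anomalies(values, threshold=3.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        # All values are identical, so nothing stands out.
        return []
    return [
        (i, v)
        for i, v in enumerate(values)
        if abs(v - mean) / stdev > threshold
    ]


# Hypothetical failed-login counts per hour for one account.
failed_logins = [2, 3, 1, 2, 4, 3, 2, 1, 3, 2, 48, 2, 3]
print(find_anomalies(failed_logins))
```

A fixed rule like this is easy to fool and tends to raise false alarms when normal behavior shifts. Production systems typically learn a baseline per user or device and update it over time, which is where machine learning comes in.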

In software development and IT, new generative AI tools can produce application code from natural language prompts. These tools are still in their infancy, however, and are unlikely to replace software developers soon. AI is also being used to automate many IT processes, including data entry, fraud detection, customer service, predictive maintenance and security.

The manufacturing sector has been at the forefront of bringing robots into the workflow. For instance, industrial robots that were once programmed to perform single tasks and kept apart from human workers increasingly function as cobots: smaller, multitasking robots that collaborate with people and take on more responsibilities in factories, warehouses and other workspaces.

In transportation, beyond its fundamental role in operating self-driving cars, artificial intelligence (AI) is used to manage traffic, predict flight delays and make ocean shipping safer and more efficient. In supply chains, AI is replacing traditional methods of forecasting demand and predicting disruptions. The COVID-19 pandemic accelerated this trend, as many businesses were caught off guard by its effects on the global supply of and demand for goods.

Banks are successfully using chatbots to inform customers about services and offerings and to handle transactions that don’t require human intervention. AI virtual assistants are used to improve regulatory compliance and cut its costs. Banking institutions also use AI to improve their loan decisions, set credit limits and identify investment opportunities.
